WASHINGTON – Chatbots spouting falsehoods, face-swapping apps crafting porn videos and cloned voices defrauding companies of hundreds of thousands: the scramble is on to rein in AI deepfakes, which have become a misinformation super spreader.

Artificial intelligence is redefining the proverb “seeing is believing,” with a deluge of images created out of thin air and people shown mouthing things they never said in real-looking deepfakes that have eroded online trust.

“Yikes. (Definitely) not me,” tweeted billionaire Elon Musk last year in one vivid example of a deepfake video that showed him promoting a cryptocurrency scam.

China recently adopted expansive rules to regulate deepfakes, but most countries appear to be struggling to keep up with the fast-evolving technology amid concerns that regulation could stymie innovation or be misused to curtail free speech.

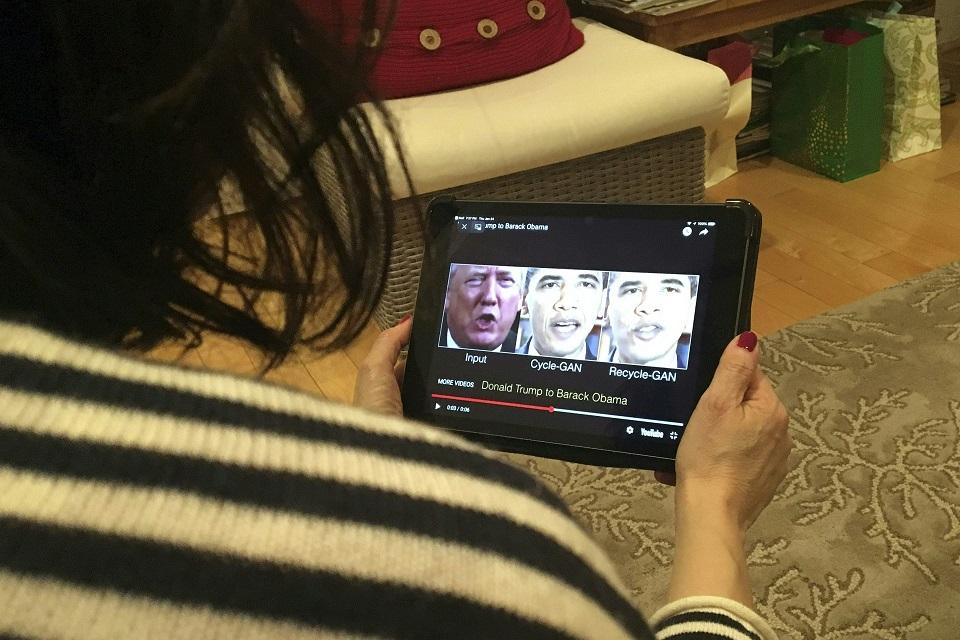

Experts warn that deepfake detectors are vastly outpaced by creators, who are hard to catch as they operate anonymously using AI-based software that was once touted as a specialized skill but is now widely available at low cost.

Facebook owner Meta said last year it took down a deepfake video of Ukrainian President Volodymyr Zelensky urging citizens to lay down their weapons and surrender to Russia.

And British campaigner Kate Isaacs, 30, said her “heart sank” when her face appeared in a deepfake porn video that unleashed a barrage of online abuse after an unknown user posted it on Twitter.

“I remember just feeling like this video was going to go everywhere – it was horrendous,” Isaacs, who campaigns against non-consensual porn, was quoted as saying by the BBC in October.

The following month, the British government voiced concern about deepfakes and warned of a popular website that “virtually strips women naked.”

‘Information apocalypse’

With no barriers to creating AI-synthesized text, audio and video, the potential for misuse in identity theft, financial fraud and the tarnishing of reputations has sparked global alarm.

The Eurasia Group called the AI tools “weapons of mass disruption.”

“Technological advances in artificial intelligence will erode social trust, empower demagogues and authoritarians, and disrupt businesses and markets,” the group warned in a report.

“Advances in deepfakes, facial recognition, and voice synthesis software will render control over one’s likeness a relic of the past.”

This week AI startup ElevenLabs admitted that its voice cloning tool could be misused for “malicious purposes” after users posted a deepfake audio purporting to be actor Emma Watson reading Adolf Hitler’s autobiography “Mein Kampf.”

The growing volume of deepfakes may lead to what the European law enforcement agency Europol described as an “information apocalypse,” a scenario in which many people are unable to distinguish fact from fiction.

“Experts fear this may lead to a situation where citizens no longer have a shared reality or could create societal confusion about which information sources are reliable,” Europol said in a report.

That was demonstrated last weekend when NFL player Damar Hamlin spoke to his fans in a video for the first time since he suffered a cardiac arrest during a match.

Hamlin thanked the medical professionals responsible for his recovery, but many who believed conspiracy theories that the COVID-19 vaccine was behind his on-field collapse baselessly labelled his video a deepfake.

‘Super spreader’

China enforced new rules last month that will require businesses offering deepfake services to obtain the real identities of their users. They also require deepfake content to be appropriately tagged to avoid “any confusion.”

The rules came after the Chinese government warned that deepfakes present a “danger to national security and social stability.”

In the United States, where lawmakers have pushed for a task force to police deepfakes, digital rights activists caution against legislative overreach that could kill innovation or target legitimate content.

The European Union, meanwhile, is locked in heated discussions over its proposed “AI Act.”

The law, which the EU is racing to pass this year, would require users to disclose deepfakes, but many fear the legislation could prove toothless if it does not cover creative or satirical content.

“How do you reinstate digital trust with transparency? That is the real question right now,” Jason Davis, a research professor at Syracuse University, told AFP.

“The (detection) tools are coming and they’re coming relatively quickly. But the technology is moving perhaps even quicker. So like cyber security, we will never solve this, we will only hope to keep up.”

Many are already struggling to comprehend advances such as ChatGPT, a chatbot created by the US-based OpenAI that is capable of generating strikingly cogent texts on almost any topic.

In a study, media watchdog NewsGuard, which called it the “next great misinformation super spreader,” said many of the chatbot’s responses to prompts related to topics such as Covid-19 and school shootings were “eloquent, false and misleading.”

“The results confirm fears… about how the tool can be weaponized in the wrong hands,” NewsGuard said. – Agence France-Presse

Source: www.gmanetwork.com